Thoughts on Force Touch and Haptic Feedback in OS X

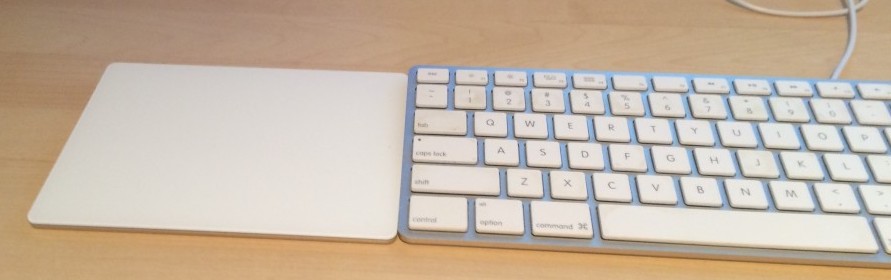

Back at the end of October I couldn’t resist getting a Magic Trackpad 2 for my iMac due to all three of its advancements over the earlier model:

- Force Touch and Haptic Feedback, the real topic of this post

- Built-in Lightning-rechargeable battery (which has already proven useful at least once: the trackpad sends its data over USB while recharging, so when my iMac’s Bluetooth refused to work until a reboot, I could still use a real trackpad in those minutes, and even postpone the reboot if desired)

- 30% larger (which comes in handy in many ways, now that I’ve retrained my hand to remember its larger size)

In the intervening time, as I’ve been at the forefront of this new input (Force Touch) and output (haptic, or as Apple calls it, Taptic, feedback) dimension in OS X, I’ve begun to recognize some of the ways Apple could expand this technology as it becomes more ubiquitous, literally adding new dimensions to the OS with few, if any, further hardware advancements.

The Taptic Engine is used most prevalently in the trackpad to mimic the “physical click” without anything moving (or, more specifically, nothing moves because of what we do; things move slightly because of what the trackpad itself does). The trackpad senses how much pressure your fingers put on it and, when enough pressure is applied, “clicks” your fingers using the Taptic Engine and sends a click command to OS X. (The exception is a drawing environment, where in many apps the pressure affects tool thickness much like a drawing tablet does, which is also why, I’ve noticed, Apple puts the trackpad alongside such products in a feature on the Apple Store iOS app.) It also introduces the deeper second-level “force click” for additional functionality on certain items in the OS. I describe clicking with this trackpad as it clicking you instead of you clicking it. Not what we’re used to with input devices!

But you recognize this device as an output device when you realize that no pressure need be put on the trackpad for it to use its Taptic Engine. In this manner it truly adds a new dimension to OS X. Apple uses this for alignment guides in Pages, Numbers, and Keynote, and in iMovie to alert you to the ends of clips when editing in the Timeline. Beyond that, they don’t seem to use it anywhere else (surprisingly, this includes their own interface layout editor in Xcode, among other omissions). A few third-party apps have added this kind of physical dimension to alignment guides, just as they have adopted the pressure-sensitive drawing functionality Apple seems to have only in the Markup extension (and its host, Preview), but haptic feedback as pure output in OS X basically ends at that point. One thought of mine: Apple should standardize GUI (graphical user interface, for those uninitiated in software development) elements like these alignment guides so that every app uses a recognized look for them and, oh, also gets haptic feedback on them “for free.” Doing so would bring this dimension of output to some maturity and first-class citizenship in OS X.

Another place the Taptic Engine could be utilized across the board in OS X: the Find functionality in apps. Just think about what it would (literally) feel like if you searched for a word in Safari, or Pages, or really any app, and your finger was tapped whenever, as you scrolled through the document, the searched-for phrase landed on the same horizontal line as the cursor (yes, slower than the standard keyboard shortcuts, but there are times when scrolling the document to the highlighted phrases makes sense). Apple could probably do this universally if it were implemented in the lowest-level content display elements (text views, web views, and such). Okay, in all likelihood Microsoft Office wouldn’t work with this given its slow adoption of standard OS X elements, but most other places you’d want this functionality would have it.
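To make the Find idea a little more concrete, here is a minimal sketch of the logic a text or web view might run while scrolling. Everything in it is hypothetical: `MatchLine`, `matchUnderCursor`, `makeFindTapper`, and the `tap` callback are names I made up for illustration, not OS X or WebKit APIs, and the actual haptic call is left to whatever the host view would provide.

```typescript
// Hypothetical sketch: none of these names are real OS X or WebKit APIs.

/** Vertical extent of one highlighted Find match, in document coordinates. */
interface MatchLine {
  top: number;
  bottom: number;
}

/** Index of the match whose line contains the cursor, or -1 if there is none. */
function matchUnderCursor(matches: MatchLine[], cursorDocY: number): number {
  return matches.findIndex((m) => cursorDocY >= m.top && cursorDocY < m.bottom);
}

/**
 * Returns a scroll handler that calls `tap` once each time a different match
 * line arrives under the cursor. `tap` stands in for the haptic call.
 */
function makeFindTapper(
  matches: MatchLine[],
  tap: (matchIndex: number) => void
): (scrollTop: number, cursorViewportY: number) => void {
  let current = -1; // match currently under the cursor, if any
  return (scrollTop, cursorViewportY) => {
    // Convert the cursor's on-screen position to a document position.
    const hit = matchUnderCursor(matches, scrollTop + cursorViewportY);
    // Tap only on arrival at a new match, not on every scroll event.
    if (hit !== -1 && hit !== current) {
      tap(hit);
    }
    current = hit;
  };
}
```

The one design choice worth noting is that the handler remembers which match is under the cursor, so lingering on a line produces a single tap rather than a buzz on every scroll event.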

Those are both ideas that would just enhance everyone’s use of OS X by adding a new dimension to specific areas. But one other potential use of the Taptic Engine in OS X would strive to enhance the entire experience for a specific yet important subset of users: those who rely on the Accessibility features, and specifically those with impaired vision. The current uses, and most potential uses, of haptic feedback in OS X are, while not short-sighted, definitely compartmentalized into specific use cases. One word sums up this final Taptic-specific thought I’ve had in these first months using a Force Touch-enabled trackpad: texture. What if everything about OS X were textured to mimic real-world textures? This would perhaps take more subtle and specifically placed taps than the current Taptic Engine can produce, but it is still worth thinking about and striving towards. What if you could feel when the cursor was over a button, or passing into a new window? There could be different tap patterns for normal versus destructive buttons, and so forth. The shadowing of windows and GUI elements? Those would literally be felt when moving the cursor around on screen. To most people this would be a cool feature (and surely something game and creative app developers could take advantage of), albeit one even I might grow tired of and disable, but it would have the potential to be life-changing for anyone who cannot see the cursor well. Adding this to OS X’s Accessibility features would be just amazing, and would also show that the haptic technology truly has reached a point of maturity it definitely doesn’t have on OS X today.

There are still inconsistencies in this technology across Apple’s platforms that I feel also need to be smoothed out. Among other things, Live Photos show their life when 3D Touched on the iPhone. Yet force clicking them in Photos on OS X doesn’t do the same thing (in fact, can you even see the live part of Live Photos in the app?). My point is, name of feature aside, regular users expect this level of deep feature parity and continuity between their devices, not just the higher-level parity in which each Apple device is a phone (quite literally if you’re on AT&T), can access your text messages, and so forth. These are different teams, I get that, but we maybe shouldn’t expect deep advancements in Taptic integration in OS X before more things even out across the ecosystem. On the flip side, OS X Safari has deep Force Touch APIs, but Mobile Safari doesn’t have any 3D Touch equivalent. Why not seems strange indeed, because certainly the iPhone 6S(+) could handle them. I really might just integrate such APIs into websites I write (some of) the code for if they had wider availability.
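For a sense of what the OS X Safari side looks like, here is a rough sketch built around the `webkitmouseforcechanged` event and the `webkitForce` value it carries. The thresholds of 1 and 2 are what I understand `MouseEvent.WEBKIT_FORCE_AT_MOUSE_DOWN` and `MouseEvent.WEBKIT_FORCE_AT_FORCE_MOUSE_DOWN` to be, so treat them as assumptions; `classifyForce`, `watchForce`, and the two small interfaces are names of my own invention so the sketch stands alone without DOM typings.

```typescript
type ForceStage = "none" | "click" | "force-click";

// Assumed values of MouseEvent.WEBKIT_FORCE_AT_MOUSE_DOWN and
// MouseEvent.WEBKIT_FORCE_AT_FORCE_MOUSE_DOWN; in a real page, read the
// constants from MouseEvent instead of hard-coding them.
const FORCE_AT_MOUSE_DOWN = 1;
const FORCE_AT_FORCE_MOUSE_DOWN = 2;

/** Maps a raw webkitForce reading onto the click stage it has reached. */
function classifyForce(force: number): ForceStage {
  if (force >= FORCE_AT_FORCE_MOUSE_DOWN) return "force-click";
  if (force >= FORCE_AT_MOUSE_DOWN) return "click";
  return "none";
}

/** Minimal hypothetical shapes so the sketch compiles without DOM typings. */
interface ForceEventLike {
  webkitForce?: number;
}
interface ListenerTarget {
  addEventListener(type: string, listener: (event: ForceEventLike) => void): void;
}

/** Calls `onStage` whenever the pressure crosses into a different stage. */
function watchForce(
  target: ListenerTarget,
  onStage: (stage: ForceStage) => void
): void {
  let last: ForceStage = "none";
  target.addEventListener("webkitmouseforcechanged", (event) => {
    // Browsers without Force Touch support never set webkitForce.
    const stage = classifyForce(event.webkitForce ?? 0);
    if (stage !== last) {
      last = stage;
      onStage(stage);
    }
  });
}
```

In a page, `target` would be a DOM element (a cast may be needed under strict DOM typings), and since the event simply never fires in browsers without Force Touch support, the rest of the site is unaffected there.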

It is also worth remembering that most Apple laptops don’t have this hardware in them (only the recent MacBooks and Retina MacBook Pros do), and few desktop users, relative to how many of those Macs are around, have this trackpad yet. Also, OS X El Capitan did come out when the laptops already had this hardware, but the Magic Trackpad 2 came a week or more later, so technically OS X 10.12 will be the first OS released after every Mac has the potential to use Force Touch and Taptic hardware as a main input device. Perhaps we will see some advancements along these lines when the next OS versions are announced this June. But for now, more so than on iOS or watchOS, Force Touch and haptic feedback on OS X remain somewhat of a niche feature. It will take both the hardware becoming ubiquitous and an OS developed from day one with every Mac able to have the hardware, so that it gets more attention at the OS level, before we’re likely to see this new frontier of OS X interaction truly mature.

That said, I for one love having such an input/output device, even if the pure-haptic features are few and far between and the rest has already become second nature. Feel free to comment on my thoughts regarding the potential Force Touch and the Taptic Engine have in OS X, or to add your own. I’d love to hear what others who are using a Force Touch trackpad think.